Saturday, April 15, 2017

Wednesday, December 19, 2012

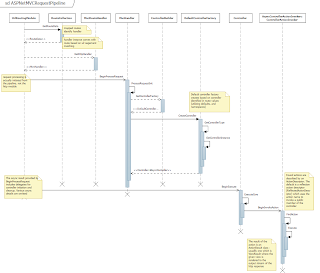

ASP.NET MVC 4 Execution Sequence

I recently took a deep dive into the internals of the ASP.NET MVC code to facilitate a design discussion around web application extensibility. MVC is extremely well suited to extensible use, providing an opening at just about every step in the execution life-cycle.

I created a detailed sequence diagram of the action execution path, and something of an overview of the request life-cycle. I have glossed over some details, especially concerning alternate paths for asynchronous vs. synchronous execution.

I don't have a lot to add beyond the notes I took while I created a couple of sequence diagrams, but I have shared both the images and the original UML diagrams project for VS 2012 if you may find them useful. If you improve or expand upon them, please let me know. Enjoy.

Wednesday, December 15, 2010

Script Block Type and Dynamic Script Loading

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<script type="text/javascript" src="//ajax.googleapis.com/ajax/libs/jquery/1.4.3/jquery.min.js"></script>

<script language="javascript" type="text/ecmascript">

$(document).ready(function() {$("#target").load("/testcontent.htm");

})

</script>

</head>

<body>

<div id="target">

</div>

</body>

</html>

Simple Content Page:

<script type="text/ecmascript">

$("#test").css("background-color", "red");

</script>

<div id="test" style="height:20px;width:20px;">

HI!

</div>

Wednesday, September 16, 2009

Building General Purpose Lambda Expressions

I was fascinated a while back to find the Expression class being used to build general purpose lambda expressions while researching the use of the Repository pattern and the Entity Framework. I didn’t look into it much at the time, but have recently had the time to dig a little deeper.

By general purpose lambda I mean using classes in the System.Linq.Expressions namespace to build a lambda at run time from parameters that let you choose which class, method, and properties are used to build the expression executed as a delegate.

| Person | |
| --- | --- |
| FirstName | string |
| LastName | string |
| DateJoined | DateTime |
| UserRank | Int32 |

You have an instance of a generic List<Person>.

We can start to understand what we need if we decide that we’re going to use the Sort operation on List<T>, which accepts a Comparison<T> delegate.

public enum SortBy

{

FirstName

,LastName

,DateJoined

,UserRank

}

Comparison<Person> fnameCompare =

(x, y) => x.FirstName.CompareTo(y.FirstName);

Comparison<Person> lnameCompare =

(x, y) => x.LastName.CompareTo(y.LastName);

Comparison<Person> dateCompare =

(x, y) => x.DateJoined.CompareTo(y.DateJoined);

Comparison<Person> rankCompare =

(x, y) => x.UserRank.CompareTo(y.UserRank);

We could do a switch statement and sort with the correct delegate. However, with the availability of a "CompareTo" method on every type on which we want to sort, and a common delegate type with basically the same expression syntax, it looks like we can do better.

The goal of our expression building will be to build an expression of the form (x, y) => x.[FieldName].CompareTo(y.[FieldName]), where x and y are input arguments of type Person and the CompareTo operation is executed against the underlying field type.

In order to build such an expression we will need parameters for the delegate, which in this case are an x and a y parameter of type Person, since the Comparison delegate signature is:

public delegate int Comparison<T>(T x, T y);

var xparam = Expression.Parameter(typeof(Person), "x");

var yparam = Expression.Parameter(typeof(Person), "y");

We are also going to need references to the field on each delegate parameter so we can run the CompareTo operation on the fields involved:

var xprop =

Expression.Property(xparam, this.SortBy.ToString());

var yprop =

Expression.Property(yparam, this.SortBy.ToString());

All 4 of these statements create expressions. In the first case we are creating instances of ParameterExpression, and in the second case MemberExpression. What helped my understanding most was realizing that each concrete type of Expression adds a node to the parse tree. As such, picking the right type of Expression to represent what you want is key to building the pieces of your overall lambda expression.

Note that in the code above, this.SortBy is a local field of the SortBy enum type whose values match the field names on the target type; it is a stand-in for passing the field name as a string.

Since we now have our delegate parameters, and the participating fields from those parameters, we can build the call to CompareTo. Since we want to add a method call to the tree, we will build a MethodCallExpression using the static Expression.Call helper method:

BindingFlags flags = BindingFlags.FlattenHierarchy |

BindingFlags.ExactBinding |

BindingFlags.NonPublic |

BindingFlags.Public |

BindingFlags.Instance;

MethodInfo info = xprop.Type.GetMethod("CompareTo", flags, null, new Type[] { xprop.Type }, null);

var methodCall = Expression.Call(xprop, info, yprop);

I used an overload that takes a MethodInfo because the underlying FindMethod used in the Expression class does not specify "ExactBinding" and fails because it also finds the CompareTo overload with the object type parameter. Also note that the MemberExpression instance xprop has a handy Type property that provides the type of the field to which it was bound.

With these 5 expressions ready to go all we need to do is generate a lambda expression of the appropriate delegate type and compile it:

var sortExpression = Expression.Lambda<Comparison<Person>>(

methodCall

, xparam

, yparam);

return sortExpression.Compile();

Place this code in a method with a return type of Comparison<Person>, which can then be called to retrieve the delegate and sort your list.
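Pulled together, the pieces above might look like the following sketch. The PersonSorter class, the BuildComparison method name, and the sample data are my own placeholders, not part of the original code, and I have swapped in the simpler Type.GetMethod(name, Type[]) overload in place of the BindingFlags lookup shown earlier; treat it as one possible arrangement rather than the definitive one.

```csharp
using System;
using System.Collections.Generic;
using System.Linq.Expressions;
using System.Reflection;

public class Person
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public DateTime DateJoined { get; set; }
    public int UserRank { get; set; }
}

public enum SortBy { FirstName, LastName, DateJoined, UserRank }

// Hypothetical helper class; the name is an assumption for illustration.
public static class PersonSorter
{
    // Builds (x, y) => x.[sortBy].CompareTo(y.[sortBy]) at run time.
    public static Comparison<Person> BuildComparison(SortBy sortBy)
    {
        // Delegate parameters x and y.
        var xparam = Expression.Parameter(typeof(Person), "x");
        var yparam = Expression.Parameter(typeof(Person), "y");

        // Property access on each parameter, chosen by enum name.
        var xprop = Expression.Property(xparam, sortBy.ToString());
        var yprop = Expression.Property(yparam, sortBy.ToString());

        // Ask for CompareTo(T) by parameter type so the object overload is not chosen.
        MethodInfo info = xprop.Type.GetMethod("CompareTo", new[] { xprop.Type });
        var methodCall = Expression.Call(xprop, info, yprop);

        return Expression
            .Lambda<Comparison<Person>>(methodCall, xparam, yparam)
            .Compile();
    }
}

public static class Program
{
    public static void Main()
    {
        var people = new List<Person>
        {
            new Person { FirstName = "Ann", UserRank = 3 },
            new Person { FirstName = "Bob", UserRank = 1 },
            new Person { FirstName = "Cid", UserRank = 2 }
        };

        people.Sort(PersonSorter.BuildComparison(SortBy.UserRank));
        // Prints "Bob, Cid, Ann"
        Console.WriteLine(string.Join(", ", people.ConvertAll(p => p.FirstName)));

        people.Sort(PersonSorter.BuildComparison(SortBy.FirstName));
        // Prints "Ann, Bob, Cid"
        Console.WriteLine(string.Join(", ", people.ConvertAll(p => p.FirstName)));
    }
}
```

Compiling an expression is not free, so if the same sort field is requested repeatedly it is probably worth caching the compiled delegate per SortBy value.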

http://rogeralsing.com/2009/03/23/linq-expressions-from-and-to-delegates/

Nate Kohari’s use in Community Server 5’s REST API: http://kohari.org/2009/03/06/fast-late-bound-invocation-with-expression-trees/ and the related Rick Strahl entry: http://www.west-wind.com/weblog/posts/653034.aspx

http://www.codeproject.com/KB/recipes/Generic_Sorting.aspx

http://www.codeproject.com/KB/architecture/linqrepository.aspx

Monday, August 17, 2009

VS 2008 SharePoint Workflow Templates on x64

As acknowledged by MS in the readme file, the SharePoint workflow project templates for sequential and state machine workflows do not work on an x64 machine.

The error is blunt and unhelpful: “A 32-bit version of SharePoint Server is not installed. Please install a 32-bit version of SharePoint server.”

Getting over this hurdle to develop a SharePoint workflow in no way requires such involved effort.

Instead:

- Create a standard WF workflow from the .NET 3.0 template of your choice

- Add a file called feature.xml with the following content:

<?xml version="1.0" encoding="utf-8" ?>

<Feature xmlns="http://schemas.microsoft.com/sharepoint/"

Id="My generated unique GUID"

Title="My Workflow"

Description="This feature creates my workflow."

Version="1.0.0.0"

Scope="Site">

<ElementManifests>

<ElementManifest Location="workflow.xml"/>

</ElementManifests>

</Feature>

- Add a file called workflow.xml with the following content:

<?xml version="1.0" encoding="utf-8" ?>

<Elements xmlns="http://schemas.microsoft.com/sharepoint/">

<Workflow

Id="726DC49B-B3DE-455d-8ACB-A8CA86894F87"

Name="Expense Report Approval Workflow Template"

Description="This workflow template enables expense report approvals and payment"

CodeBesideClass="MyWorkflowNamespace.MyWorkflowClass"

CodeBesideAssembly="MyWorkflow,Version=1.0.0.0, Culture=neutral, PublicKeyToken=MySigningKeyToken">

<Categories></Categories>

<MetaData></MetaData>

</Workflow>

</Elements>

- Create a folder structure in your project for TEMPLATE\FEATURES\MyFeatureFolder

- Add a file called install.bat which automates the tasks of GAC registration, feature file copying, feature installation, and IIS resetting. Set this file to be called by a post-build action. The file should contain something like:

@SET TEMPLATEDIR="C:\Program Files\Common Files\Microsoft Shared\Web Server Extensions\12\TEMPLATE"

@SET GACUTIL="C:\Program Files (x86)\Microsoft Visual Studio 8\SDK\v2.0\Bin\gacutil.exe"

@SET STSADM="C:\Program Files\Common Files\Microsoft Shared\Web Server Extensions\12\BIN\STSADM"

Echo Installing in GAC

%GACUTIL% -if bin\debug\MyWorkflow.dll

Echo Copying files

xcopy /e /y TEMPLATE\* %TEMPLATEDIR%

Echo Installing feature

%STSADM% -o installfeature -filename MyFeatureFolder\feature.xml -force

ECHO Restarting IIS Worker process

"c:\windows\system32\inetsrv\appcmd" recycle APPPOOL "SharePoint Apps"

- Add an OnWorkflowActivated activity to your workflow from the SharePoint activities in your toolbox.

- Optionally change the base class on your workflow program class to SharePointSequentialWorkflowActivity

- Add the following field declarations to your workflow class:

public Guid workflowId = default(System.Guid);

public SPWorkflowActivationProperties workflowProperties = new SPWorkflowActivationProperties();

- Set the WorkflowProperties property on your OnWorkflowActivated activity equal to your new workflowProperties field.

There is another workaround posted.

I won’t hold my breath, but you can also go vote on the bug in hopes that perhaps a patch will be released. I also imagine that packages for VS ‘10 will not have this problem.

Monday, July 06, 2009

LINQ, Persistence Ignorance, and Testing with Data Context/Entity Framework

One thing I really try to avoid when writing tests is testing things that just aren’t going to break, or at least are of no concern to the application code I wrote. Database IO certainly fits this description, and yet I have found myself testing it as a consequence of wanting to test LINQ-based statements embedded in the data access code.

Since other people have encountered and attempted to solve the same problem, a few hours of reading showed that in order to remove database IO testing from my tests, and to improve the design of the data access layer, it is necessary to implement some pattern of persistence ignorance like the Repository.

In short form a repository allows business code to ask questions of the data layer without knowing anything more than the interfaces of data-storage objects. Want all the customers with outstanding invoices? Get a list of ICustomer objects back from the CustomerRepository.CustomersWithOutstandingInvoices method. As a business layer developer I have no idea what you did to return this list of customers, and don’t care. You don’t expose your DataContext (or other DAL implementation) to me, and I don’t write LINQ directly against the Customers table because the management of the DataContext and particulars of locating outstanding invoices may be too wonderful for me to know. Pretty standard separation of concerns, and very simple to implement. There are a few unique issues in a LINQ-centric world:

- How can I take advantage of delayed execution?

- How can LINQ best be supported outside the Repository?

- How can I mock-up data-centric tests when I don’t have a database to execute against?

If you hide LINQ execution behind a repository you don’t really want to provide access to ObjectQuery objects or hand out Table<T> references, as you are tied immediately back into what you were hiding. This being the case you really need to execute the queries before they leave the repository*. Performance should come from good caching techniques, and by limiting the scope of queries through tight definition of access to the data.

*update* It isn't really necessary to disconnect the query in any way that breaks delayed execution. You can maintain all the benefits and still hand back an IQueryable<T>. However, it would then be up to developer discipline to not do further manipulation that takes advantage of the underlying data provider's specific types.
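As a small illustration of what delayed execution means for a caller who receives an IQueryable<T>, here is a sketch that uses an in-memory list as a stand-in for the data source (an assumption made so the example runs without a database). The query is composed first and only evaluated when it is enumerated, so it sees data added after composition.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class Program
{
    public static void Main()
    {
        // In-memory stand-in for a table; a real repository would wrap a data context.
        var ranks = new List<int> { 1, 2, 3 };

        // Composing the query does not run it.
        IQueryable<int> aboveOne = ranks.AsQueryable().Where(r => r > 1);

        // Data added after the query was composed...
        ranks.Add(4);

        // ...is still seen, because evaluation happens here, at enumeration time.
        Console.WriteLine(aboveOne.Count()); // prints 3
    }
}
```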

LINQ can work on any IQueryable, so handing back objects that can be used in LINQ syntax queries outside the context is as easy as handing back List<T>, or IQueryable<T> references. Referring back up to issue 1 above, these post-repository queries will be disconnected from the database.

When it comes to testing, we played around with a number of different mocks and tricks to fool LINQ into executing disconnected: fake data contexts, entities without db connection strings, overrides and events that modify queries, etc. It turns out that the simplest way (in my view) is to use the repository to remove the database from the equation; that was, after all, the goal of this whole exercise. However, rather than expose a repository directly to business logic code, I prefer to put what I will call a Provider in front of the repository. This ‘Provider’ is really just another sort of repository as some envision the concept, except that it contains all the LINQ queries and entity-specific methods. It takes an IRepository implementation that exposes only CRUD operations and keeps all knowledge of the DAL implementation inside that simple repository. Why?

- It’s easier to create a general purpose IRepository interface for use in any project.

- With a simple IRepository interface you can create a simple general-purpose FakeRepository for use in testing.

- Once you can have LINQ statements dependent upon a single interface, you can inject the dependency and thereby replace the source of data during testing. You can test your LINQ query logic without going to the database.

- The logic used to obtain entity objects from methods like “CustomersWithOutstandingInvoices” is still removed from knowledge of the DAL, moving persistence ignorance as far up as we can.

How does this all come together?

- You create a concrete repository that has a concrete ObjectContext or DataContext.

- With EF ObjectContexts (my preference) you implement the Get<T>() functions on your repository by using CreateQuery methods:

public IQueryable<T> Get<T>() where T : class

{

return _context.CreateQuery<T>(typeof(T).Name) as IQueryable<T>;

}

- You create a concrete ‘Provider’ that takes an inject-able IRepository object in the constructor.

- Write your Provider LINQ queries against Get<T>() statements from the repository

- Write tests against your provider, injecting a FakeRepository that has lists as its data source. Add objects to your Provider in test setup and confirm proper retrieval/manipulation in tests.
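The steps above can be sketched roughly as follows. Everything here is illustrative: the IRepository members, the list-backed FakeRepository, the Customer class with its OutstandingBalance property, and CustomerProvider are assumptions of mine and are not the types shipped in the downloadable assembly.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical minimal interface; the real IRepository may expose more CRUD members.
public interface IRepository
{
    IQueryable<T> Get<T>() where T : class;
    void Add<T>(T entity) where T : class;
}

// In-memory fake that keeps one list per entity type.
public class FakeRepository : IRepository
{
    private readonly Dictionary<Type, object> _lists = new Dictionary<Type, object>();

    private List<T> ListFor<T>() where T : class
    {
        object list;
        if (!_lists.TryGetValue(typeof(T), out list))
        {
            list = new List<T>();
            _lists[typeof(T)] = list;
        }
        return (List<T>)list;
    }

    public IQueryable<T> Get<T>() where T : class
    {
        return ListFor<T>().AsQueryable();
    }

    public void Add<T>(T entity) where T : class
    {
        ListFor<T>().Add(entity);
    }
}

// Hypothetical entity used only for this example.
public class Customer
{
    public string Name { get; set; }
    public decimal OutstandingBalance { get; set; }
}

// The 'Provider' holds the LINQ logic and depends only on IRepository.
public class CustomerProvider
{
    private readonly IRepository _repository;

    public CustomerProvider(IRepository repository)
    {
        _repository = repository;
    }

    public List<Customer> CustomersWithOutstandingInvoices()
    {
        return _repository.Get<Customer>()
            .Where(c => c.OutstandingBalance > 0)
            .OrderBy(c => c.Name)
            .ToList();
    }
}

public static class Program
{
    public static void Main()
    {
        // Test setup: seed the fake, then inject it into the provider.
        var repository = new FakeRepository();
        repository.Add(new Customer { Name = "Contoso", OutstandingBalance = 120m });
        repository.Add(new Customer { Name = "Adatum", OutstandingBalance = 0m });
        repository.Add(new Customer { Name = "Bravo", OutstandingBalance = 5m });

        var provider = new CustomerProvider(repository);
        foreach (var customer in provider.CustomersWithOutstandingInvoices())
            Console.WriteLine(customer.Name); // prints Bravo, then Contoso
    }
}
```

Because the provider only ever sees IRepository, the same query logic runs unchanged against a repository backed by an ObjectContext or DataContext in production.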

You can download our assembly with an IRepository/FakeRepository implementation from:

http://atgitesting.codeplex.com/

Inspiration and understanding from:

The Repository Pattern Explained

Andrew Peters’ Blog » Blog Archive » Fixing Leaky Repository Abstractions with LINQ

Diego Vega - Unit Testing Your Entity Framework Domain Classes

Dynamic Queries and LINQ Expressions - Rick Strahl's Web Log

Friday, April 17, 2009

Working with detached entities and Linq to Sql

public static class DCExtensions{

public static void SafeAttach<T>(this DataContext context

, T entity)

where T : class

{

Table<T> entityTable = context.GetTable<T>();

if (entityTable == null)

return;

T instance = entityTable.GetOriginalEntityState(entity);

if (instance == null)

{

if (!entityTable.Contains(entity))

entityTable.InsertOnSubmit(entity);

else

entityTable.Attach(entity);

}

}

}

I can then have a method like the following that isn't concerned with entity state:

internal ChildObject AddAssociation(ParentObject1 parent1

, ParentObject2 parent2)

{

using (var dc = new MyDataContext())

{

dc.SafeAttach(parent1);

dc.SafeAttach(parent2);

ChildObject child = new ChildObject();

child.Parent1 = parent1;

child.Parent2 = parent2;

dc.ChildObjects.InsertOnSubmit(child);

dc.SubmitChanges();

return child;

}

}